Time Management & Localization Tools

Enabled 24/7 global operations and unlocked 3 major markets

“It’s not a module or a feature; it’s a platform. And it’s the industry’s first natural language CRM” - Simon Lidzén, CEO, quoted in iGaming Future

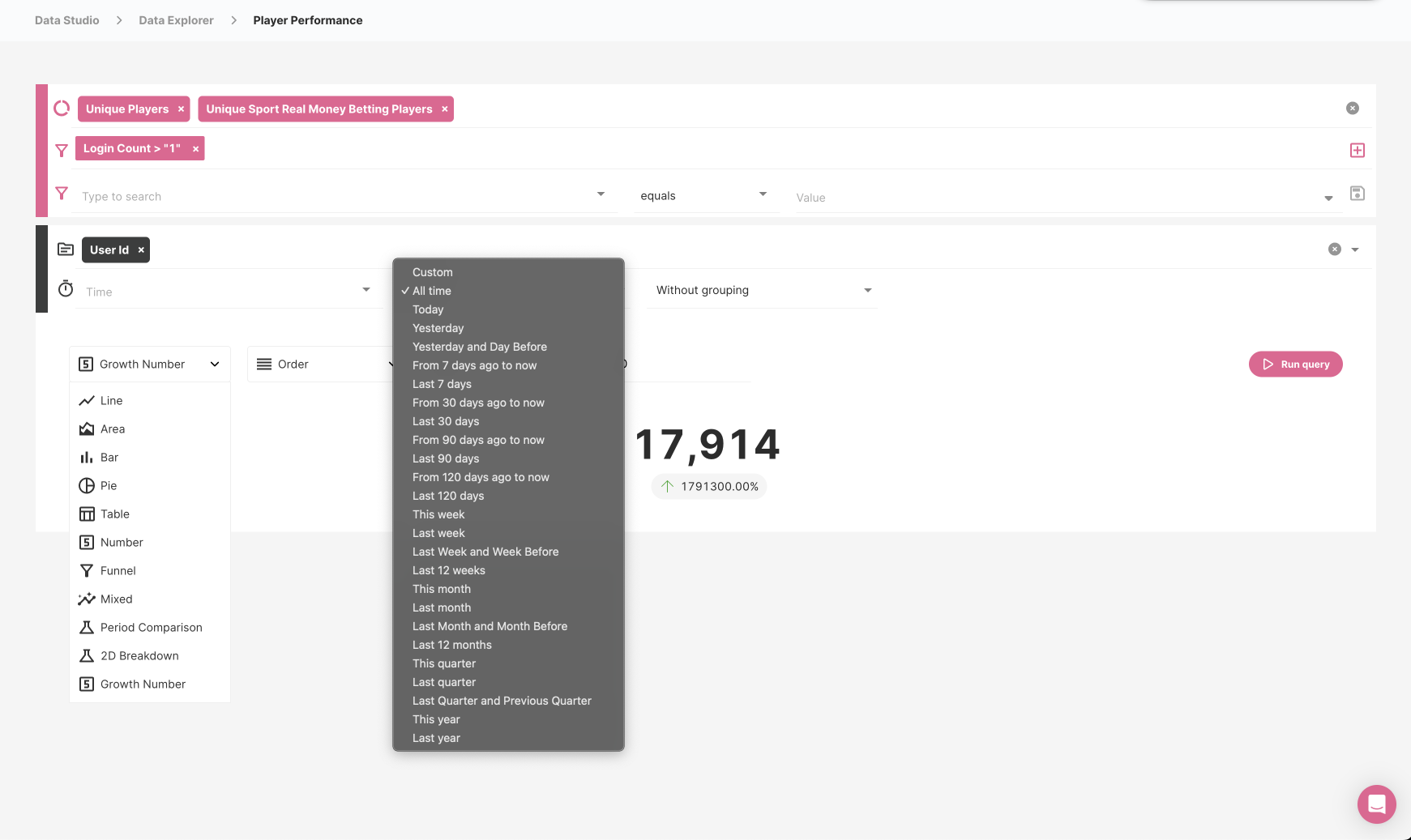

Decision: AI as amplifier of existing platform, not a parallel system that would fragment user workflows

Rationale: I advocated for integration over isolation from the earliest strategy sessions. Adding AI as a separate product would create two ways to do everything, neither complete, forcing users to context-switch constantly and never build confidence in either path. Instead, I designed AI to use existing modals and patterns wherever possible, so the new capability felt like a natural extension of what partners already knew.

Result: Partners adopted AI alongside existing workflows immediately. When AI creates activities, it populates the same Activity modal users already know, complete with segment targeting, market selection, and timing configuration. New entry point, familiar execution, no retraining required.

Decision: Single AI interface in the main navigation rather than contextual AI buttons scattered throughout the platform

Rationale: I evaluated three options during the strategy phase: a global command palette similar to Spotlight that could be invoked anywhere, contextual AI buttons on every relevant screen, or a dedicated AI space accessed from the main navigation. I chose the dedicated space because enterprise users committing real budgets needed clear scope boundaries. Scattered entry points would have created confusion about what AI could do in each context.

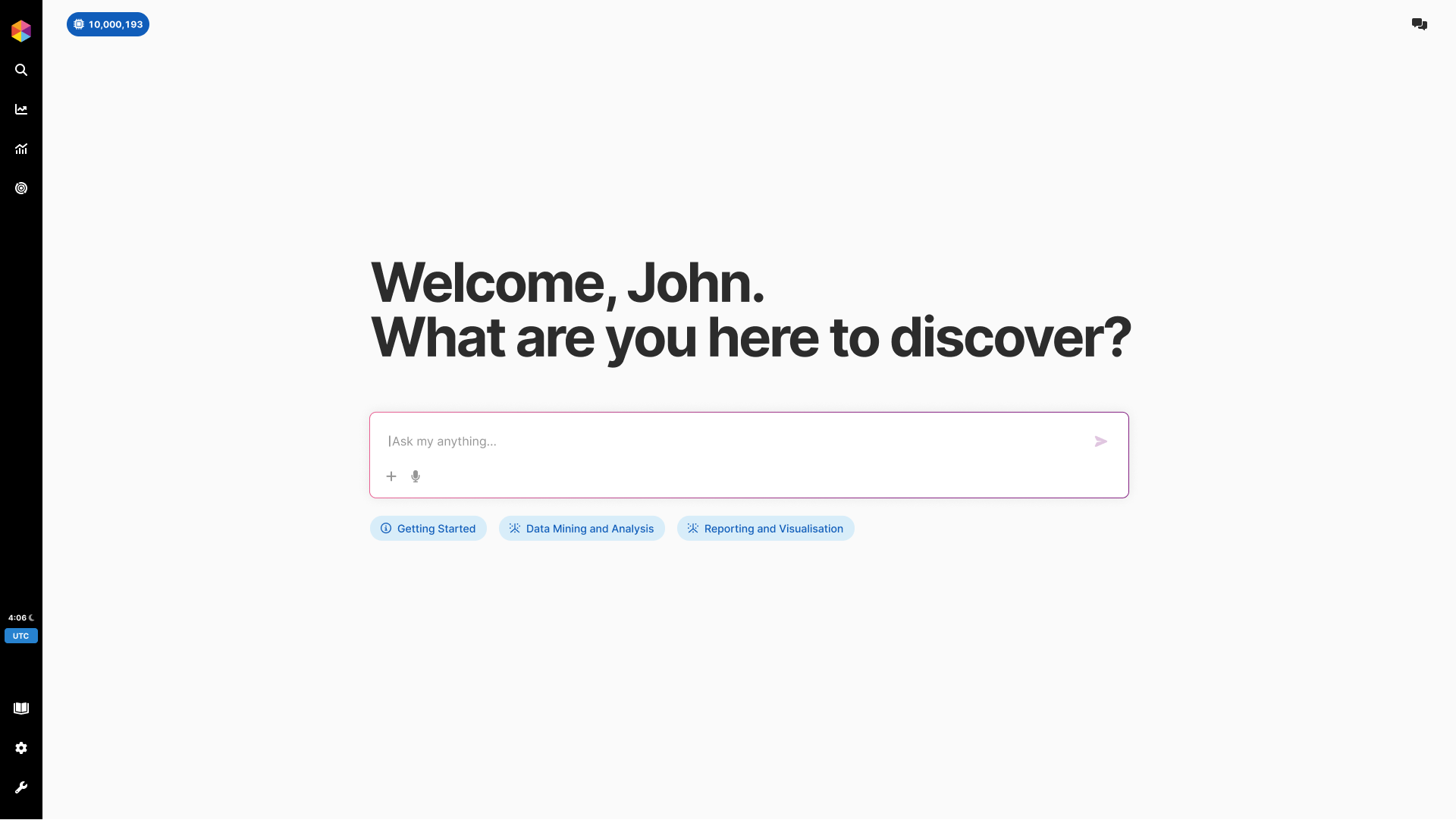

Result: Users understand AI as a distinct capability with clear boundaries. The welcome screen chips communicate exactly what is possible before users type anything, preventing the frustration that comes from discovering limitations after attempting something.

Decision: Support text, voice dictation, and file uploads as input methods from the start

Rationale: Different users have different preferences and contexts. Some want to type precise queries, others prefer dictating while reviewing other screens, and some need to upload files like sales reports for AI analysis. Restricting to text-only would have limited adoption among users who find typing cumbersome or who work with external data sources.

Result: Voice transcription works reliably for users who prefer dictation, and file uploads enable workflows like generating detailed reports from uploaded PDFs that would be impossible with text-only input.

Decision: Constrain scope aggressively and communicate limits clearly upfront rather than letting users discover them through failure

Rationale: Early prototypes let AI attempt any query, which seemed user-friendly but caused problems in testing. Users were confused and frustrated when AI confidently attempted questions outside its actual capabilities. I redesigned the welcome experience to show specific capabilities through labeled chips (Data Mining & Analysis, Reporting & Visualisation) rather than open-ended "Ask anything" framing.

Result: Usage errors dropped significantly after the redesign. Partners understood what AI could and could not do before investing time, so their attempts were more likely to succeed and their overall impression more positive.

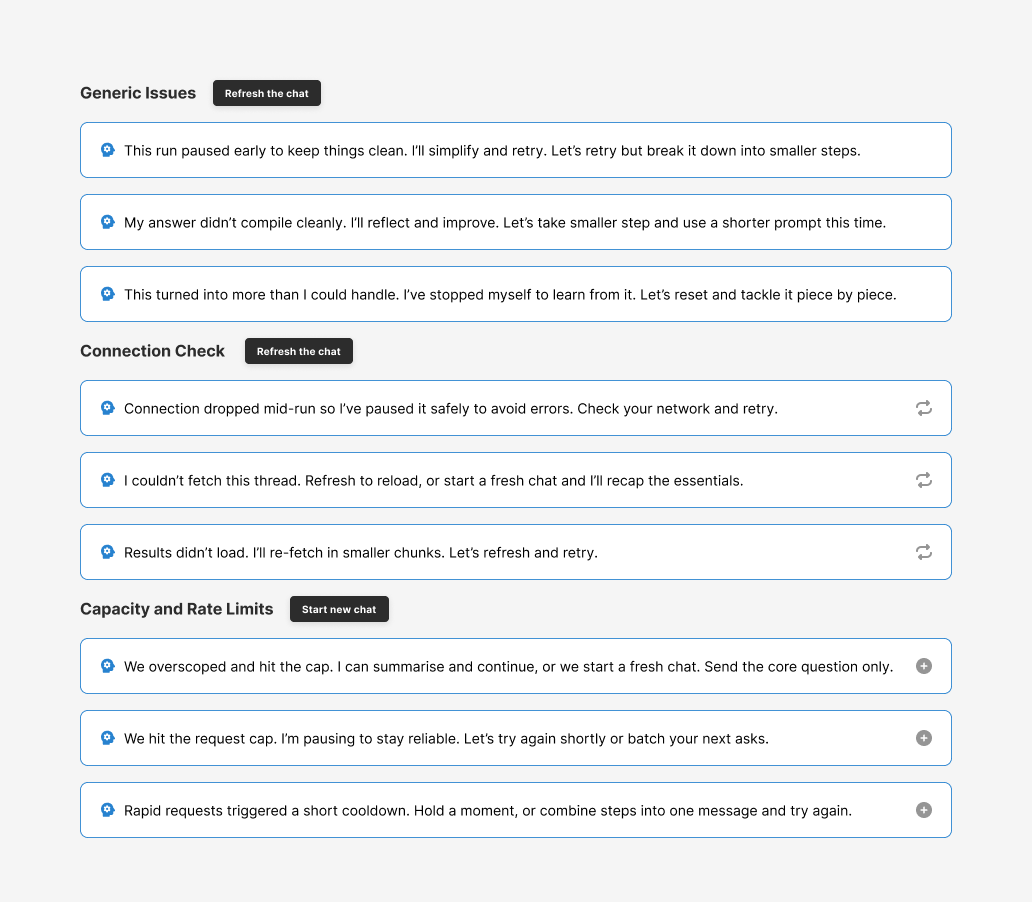

Decision: Errors as dialogue opportunities with clear recovery paths, not dead ends that require starting over

Rationale: Enterprise users committing real budgets need to trust the system even when it fails, because failures are inevitable with AI. I designed comprehensive error states where AI takes responsibility using first-person language ("I will simplify and retry", "This turned into more than I could handle") and always provides a specific recovery action. The tone is collaborative rather than blaming.

Result: Partners reported feeling in control even when things went wrong. Trust was maintained through transparency about what happened and clear guidance on what to do next.

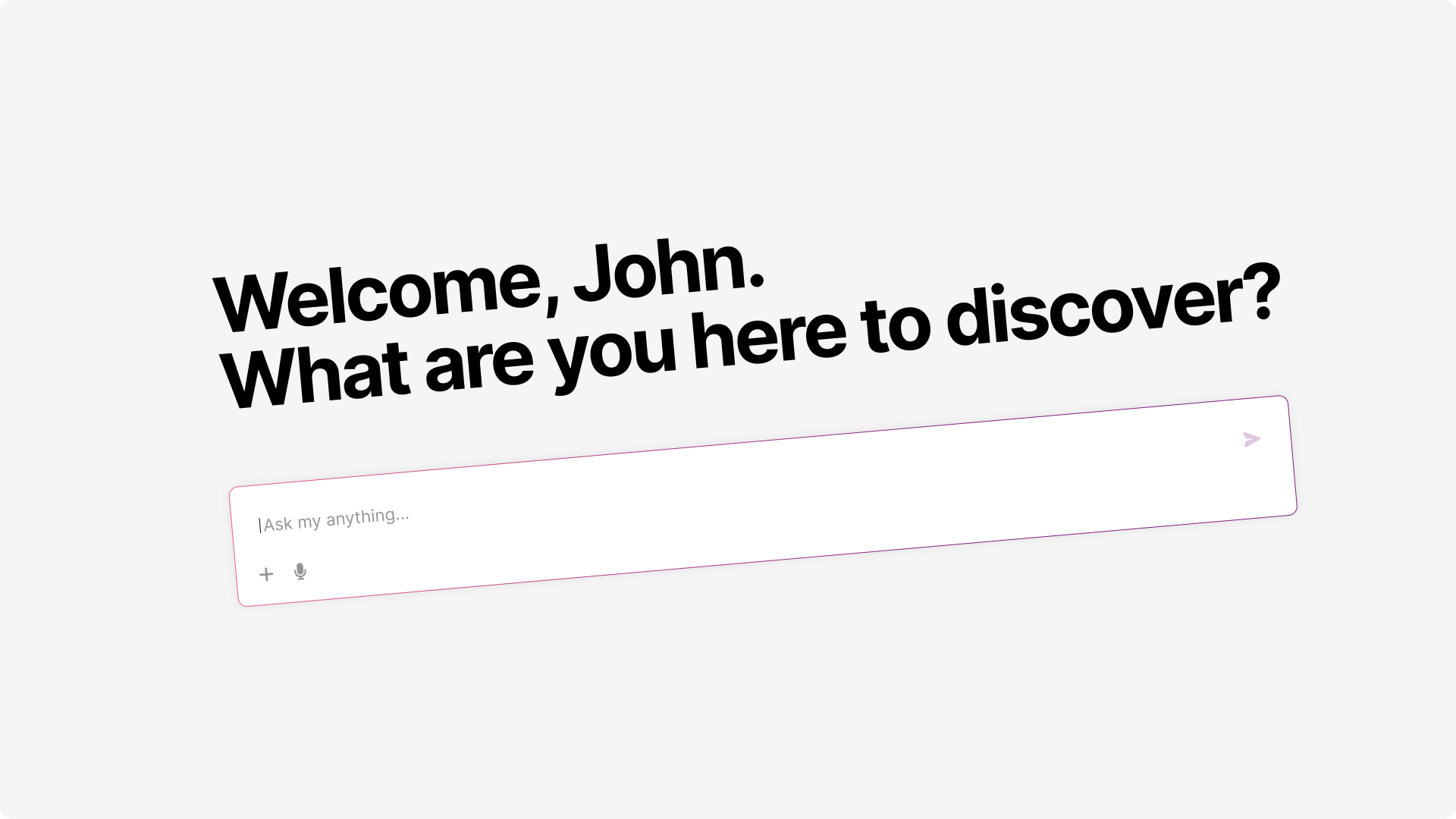

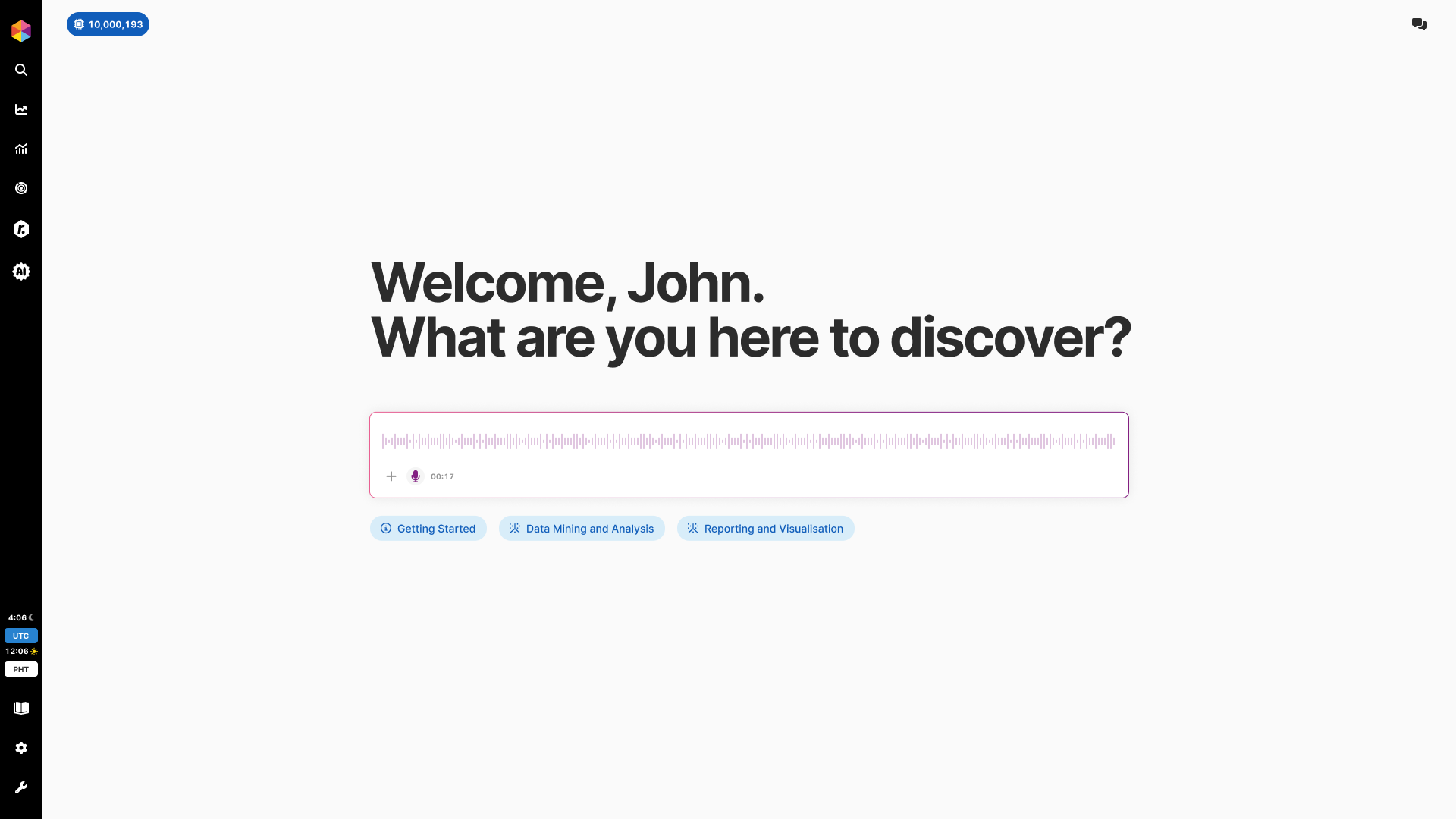

Clean welcome screen with personalized greeting, open input field supporting text entry, voice dictation via microphone button, and file attachments for document-based queries. Capability chips below the input serve dual purpose: quick-starts for common tasks and scope communication showing what the system can actually do. Persistent disclaimer about AI limitations visible from the start builds appropriate trust calibration.

Entry point balances invitation with honest scope communication, supporting text, voice, and file inputs

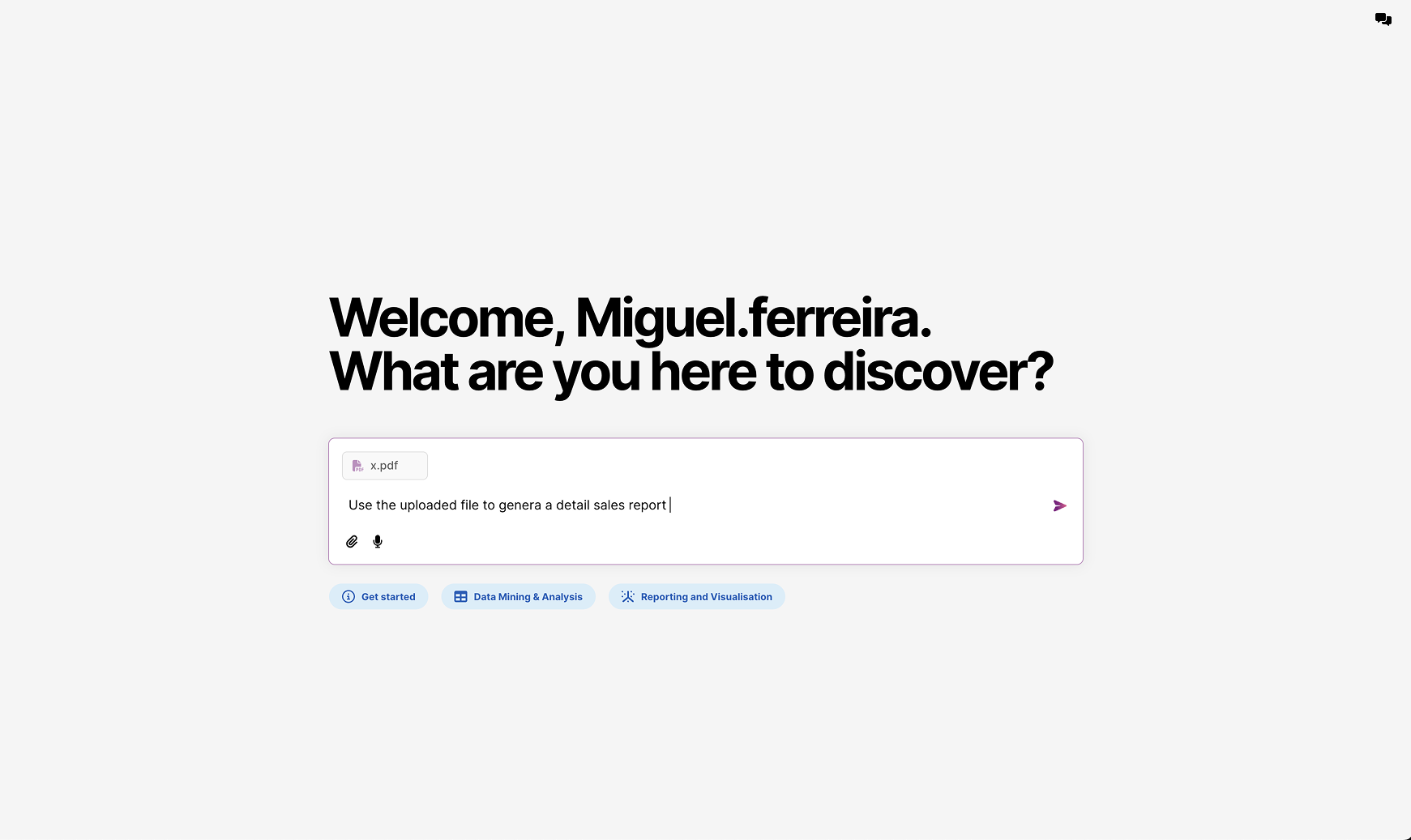

Users can attach files directly in the input field, enabling workflows like uploading a sales report PDF and requesting detailed analysis. The attached file appears as a chip above the input field, making it clear what context the AI will use. This extends AI utility beyond platform data to external documents that partners work with regularly.

File upload enables document-based queries, extending AI beyond platform data to external reports

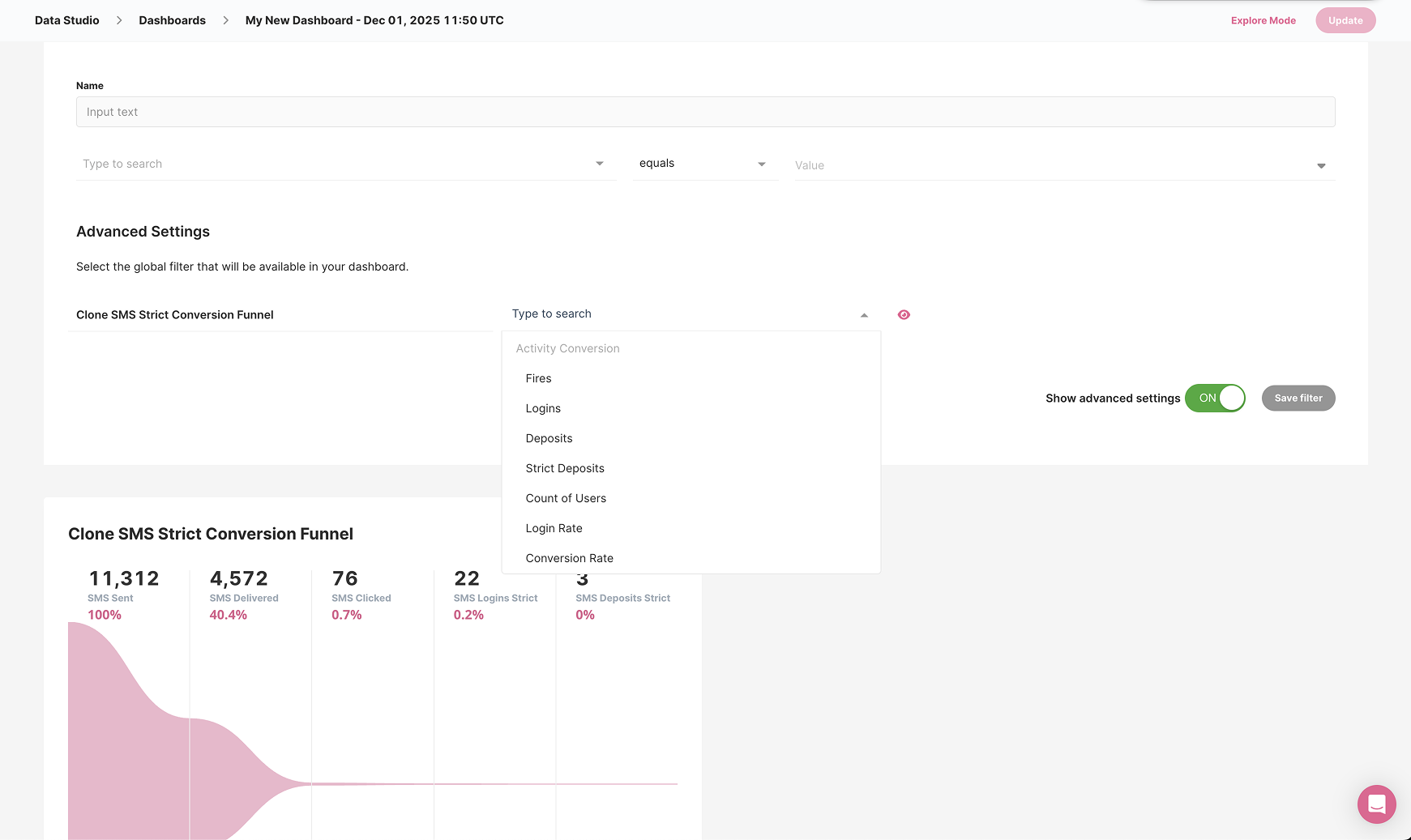

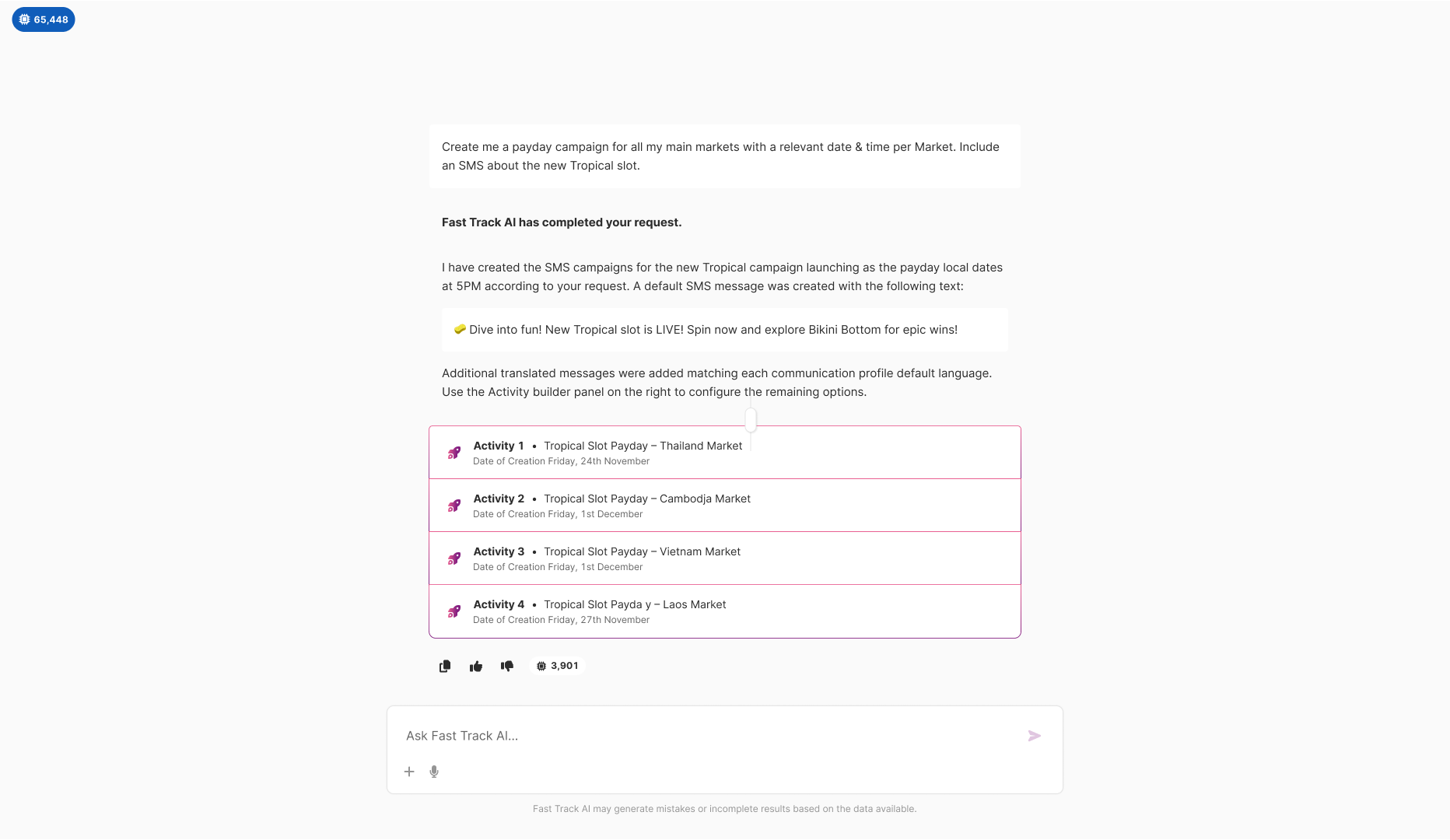

When asked to create campaigns, AI executes the request across multiple markets and shows structured confirmation: what was created, which markets were targeted with country flags for visual scanning, scheduled dates per activity, and the generated content including translated SMS messages. Created items are clickable for direct navigation. Token usage displayed at bottom provides cost transparency without interrupting the flow.

AI that acts: Creates campaigns across markets with automatic content generation and translation, showing exactly what was built

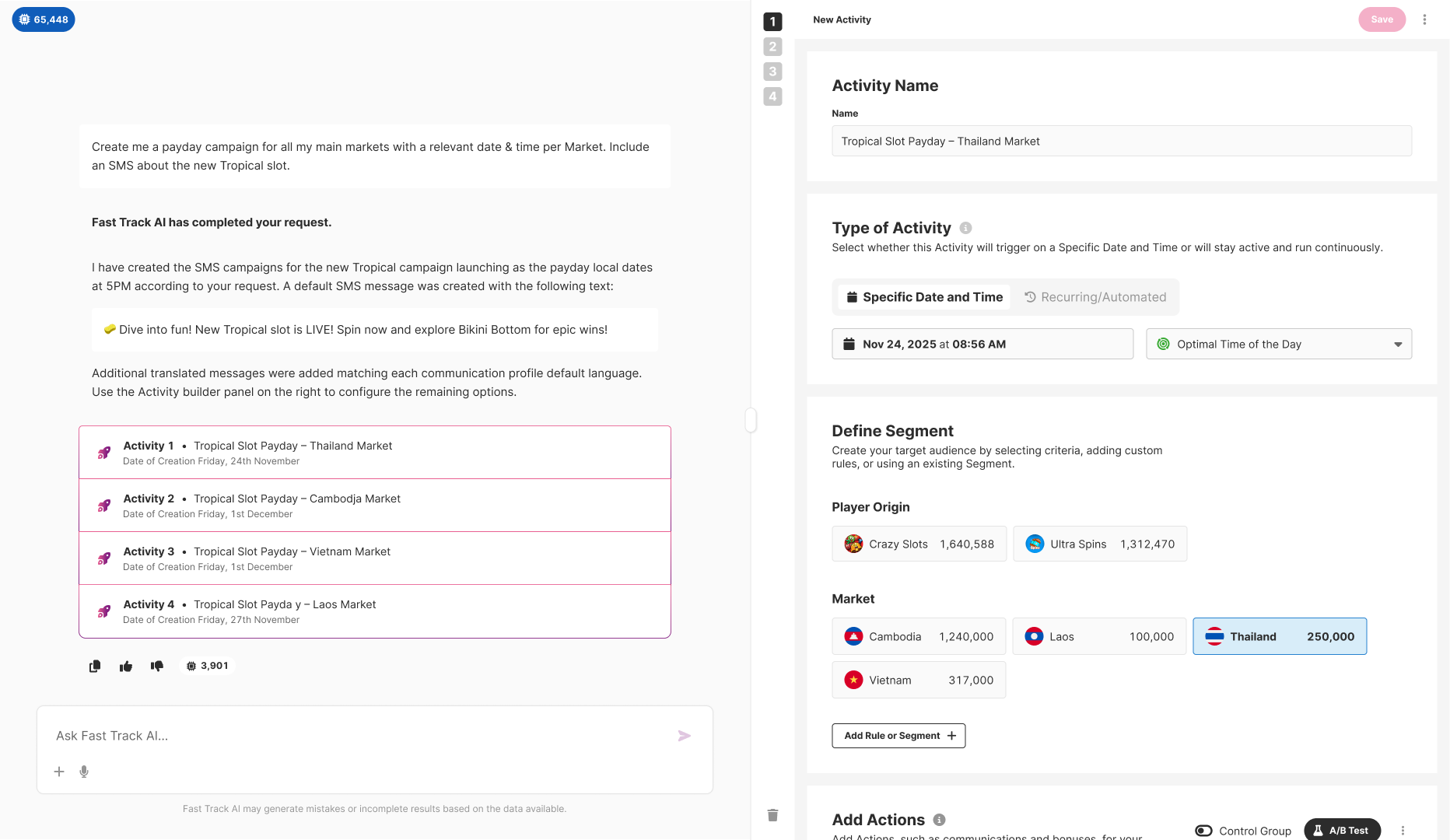

AI-generated content populates existing platform modals rather than creating new confirmation interfaces. Campaign creation uses the same Activity modal users already know, pre-filled with AI-generated settings: activity name, type, trigger date, segment targeting with player origins and markets, and a placeholder for actions. Users can review, modify any field, and save using familiar patterns. AI handles the complexity of configuration while the existing UI handles the trust of final confirmation.

Extension pattern: AI output integrates with existing modal, keeping users in familiar territory for final review and confirmation
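The extension pattern can be sketched in a few lines of TypeScript. This is an illustration under assumptions, not the platform's actual code: `ActivityForm`, `ActivityDraft`, `prefillActivityForm`, and the sample values are all hypothetical names chosen to mirror the fields listed above. The idea it shows is that AI output is only a partial draft merged into the same form state the Activity modal already uses, so no second confirmation interface is needed.

```typescript
// Hypothetical shape of the existing Activity modal's form state.
// Field names mirror the case study text; they are not the real schema.
interface ActivityForm {
  name: string;
  type: string;
  triggerDate: string; // ISO date string; empty until chosen
  segment: { playerOrigins: string[]; markets: string[] };
  actions: string[]; // left empty as a placeholder for the user to fill
}

// The AI produces only a partial draft; anything it omits stays at the default.
type ActivityDraft = Partial<Omit<ActivityForm, "segment">> & {
  segment?: Partial<ActivityForm["segment"]>;
};

// Fresh defaults on every call, so drafts never share array instances.
function emptyActivityForm(): ActivityForm {
  return {
    name: "",
    type: "",
    triggerDate: "",
    segment: { playerOrigins: [], markets: [] },
    actions: [],
  };
}

// Merge the AI draft over the defaults. The result feeds the same modal
// a manually created activity would use, where the user reviews and saves.
function prefillActivityForm(draft: ActivityDraft): ActivityForm {
  const defaults = emptyActivityForm();
  return {
    ...defaults,
    ...draft,
    segment: { ...defaults.segment, ...draft.segment },
  };
}

// Example with made-up values: the AI fills name, type, and markets only.
const prefilled = prefillActivityForm({
  name: "Weekend reload",
  type: "SMS",
  segment: { markets: ["SE", "FI"] },
});
```

Because the draft is merged rather than saved directly, every field remains editable and nothing is committed until the user confirms in the modal.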

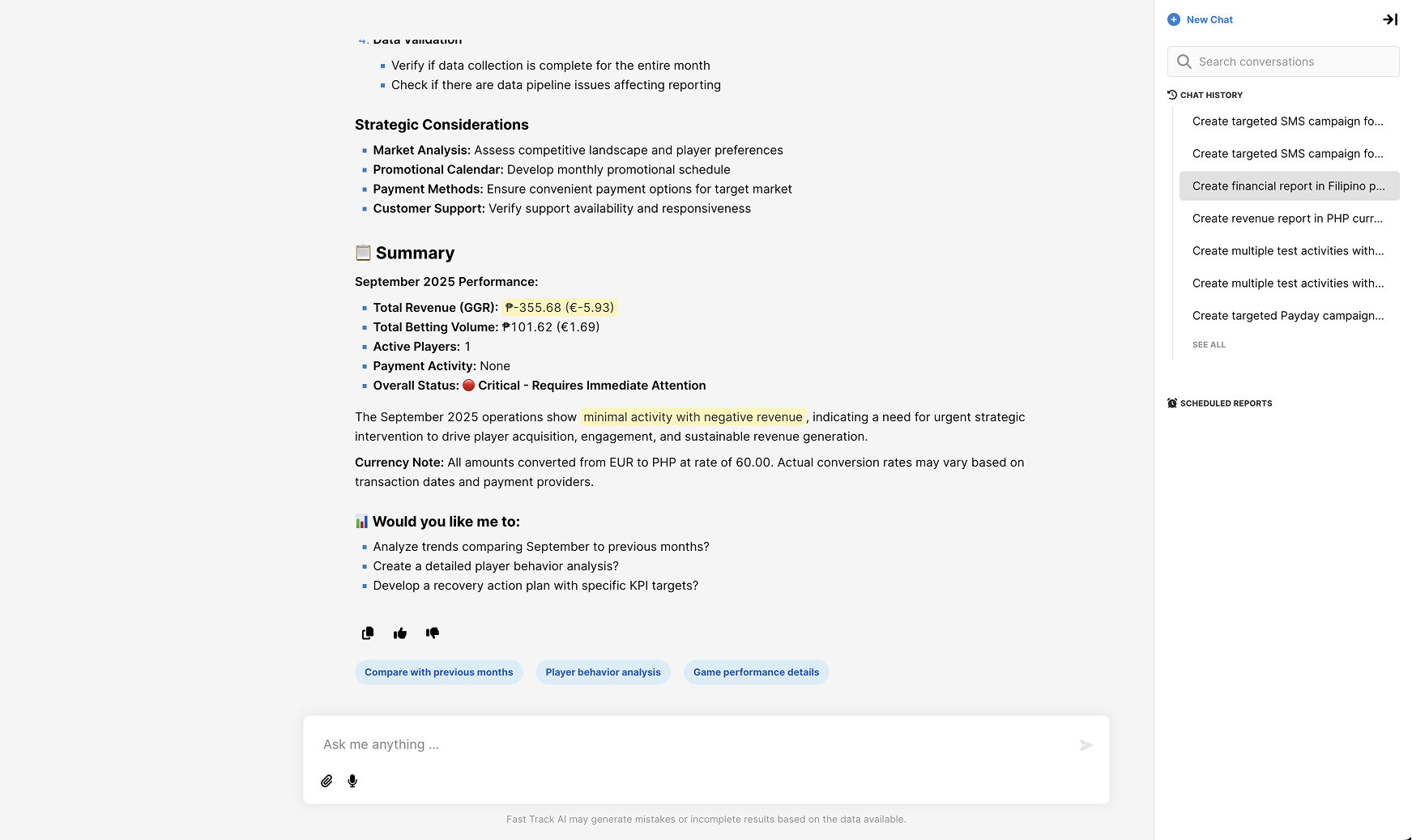

Complex analyses return structured reports with clear visual hierarchy: summary sections with key metrics, highlighted values for critical findings (revenue figures, status indicators using color-coded badges), strategic recommendations with specific next steps, and suggested follow-up queries as clickable chips. Chat history preserved in sidebar shows previous queries for context continuity. The goal is actionable insight that tells users what to do, not just raw data requiring interpretation.

Actionable insights: Key metrics highlighted, status indicators color-coded, strategic recommendations provided, one-click follow-ups available

Categorized error states for generic issues (query too complex, compilation failures), connection problems (dropped mid-run, fetch failures), and capacity limits (token caps, rate limiting). Each error type uses conversational first-person language where AI takes responsibility ("I will simplify and retry", "I have stopped myself to learn from it") and provides a specific recovery action with a clear button. Every error is a path forward with guidance, not a wall.

Trust infrastructure: Every error has a conversational explanation and a specific recovery path, AI takes responsibility
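One way to picture the error taxonomy is as a typed lookup table. The TypeScript below is a minimal sketch under assumptions: the error codes, the `ErrorState` shape, and `resolveErrorState` are hypothetical, and apart from the phrases quoted above the message strings are placeholder copy, not the shipped wording. What it illustrates is the rule that no error can exist without a first-person explanation and a recovery action.

```typescript
// Illustrative only: identifiers are hypothetical, and most copy strings
// are placeholders rather than the product's actual wording.
type ErrorCategory = "generic" | "connection" | "capacity";

type ErrorCode =
  | "query_too_complex"
  | "compilation_failure"
  | "dropped_mid_run"
  | "fetch_failure"
  | "token_cap"
  | "rate_limited";

interface ErrorState {
  category: ErrorCategory;
  message: string; // first-person explanation; the AI takes responsibility
  recoveryLabel: string; // text on the recovery button
}

// Record<ErrorCode, ErrorState> makes the compiler reject any error code
// that lacks a message and a recovery action.
const ERROR_STATES: Record<ErrorCode, ErrorState> = {
  query_too_complex: {
    category: "generic",
    message: "This turned into more than I could handle. I will simplify and retry.",
    recoveryLabel: "Simplify and retry",
  },
  compilation_failure: {
    category: "generic",
    message: "I could not put that result together.", // placeholder copy
    recoveryLabel: "Try again",
  },
  dropped_mid_run: {
    category: "connection",
    message: "I lost the connection partway through.", // placeholder copy
    recoveryLabel: "Resume",
  },
  fetch_failure: {
    category: "connection",
    message: "I could not fetch the data I needed.", // placeholder copy
    recoveryLabel: "Retry fetch",
  },
  token_cap: {
    category: "capacity",
    message: "I reached my token limit for this request.", // placeholder copy
    recoveryLabel: "Narrow the request",
  },
  rate_limited: {
    category: "capacity",
    message: "I am handling too many requests right now.", // placeholder copy
    recoveryLabel: "Try again shortly",
  },
};

// Look up the state to render for a given error code.
function resolveErrorState(code: ErrorCode): ErrorState {
  return ERROR_STATES[code];
}
```

Keeping the table exhaustive at the type level means a newly introduced failure mode cannot ship as a dead end: adding a code without a message and recovery label fails compilation.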

Strategy: Participated in ideation sessions during weeks one through three. Defined scope boundaries early with reporting and analysis as Phase 1 focus, campaign creation as Phase 2. Established extension architecture over replacement as the core principle.

Research: Used LangChain testing sessions as design research, treating every failure mode as a requirement for error handling. Partner feedback from adjacent features informed expectations about what CRM teams wanted to do but could not. No formal user research timeline was available given speed requirements, so we relied on internal expertise and rapid iteration.

Design: Rapid prototyping with daily engineering feedback kept design and development synchronized. Focused first on trust patterns (error handling, scope communication, and confirmation flows) because these had to be right from day one. Visual polish was explicitly deferred to post-MVP iteration.

Development: Eight-week sprint with continuous designer-engineer collaboration. Decisions made in prototype reviews rather than specification handoffs, accelerating the feedback loop. Scope adjusted weekly based on technical reality and testing findings.

Validation: Rolled out platform-wide to all live partners in September 2025 with monitoring. Feedback collected via Slack and direct partner communication. Observed roughly 25% of partners active within the first days, with sticky daily usage patterns.

Post-launch: Featured in iGaming Future and reviewed by EngageHut following launch. Phase 2 campaign creation capabilities have since been released. Iteration based on usage data and partner feedback continues.

“What used to take days passing between CRM and BI now happens in minutes. We can correct any result instantly and move on.”

Strategic Insight:

The real product is not the AI capability itself but the confidence users feel when using it. Partners commit real budgets through this system, targeting real players with real money at stake.

Every design decision must answer: does the user feel in control? Do they understand what will happen before it happens? Can they recover from mistakes without losing their work or their trust?

AI capability means nothing without the trust infrastructure to support it. The goal is empowering CRM professionals to unleash their creativity without the constant technical barriers that have historically slowed them down.